Data Aggregation Technology: Complete Guide to Structured Data Integration

Understanding data aggregation technology

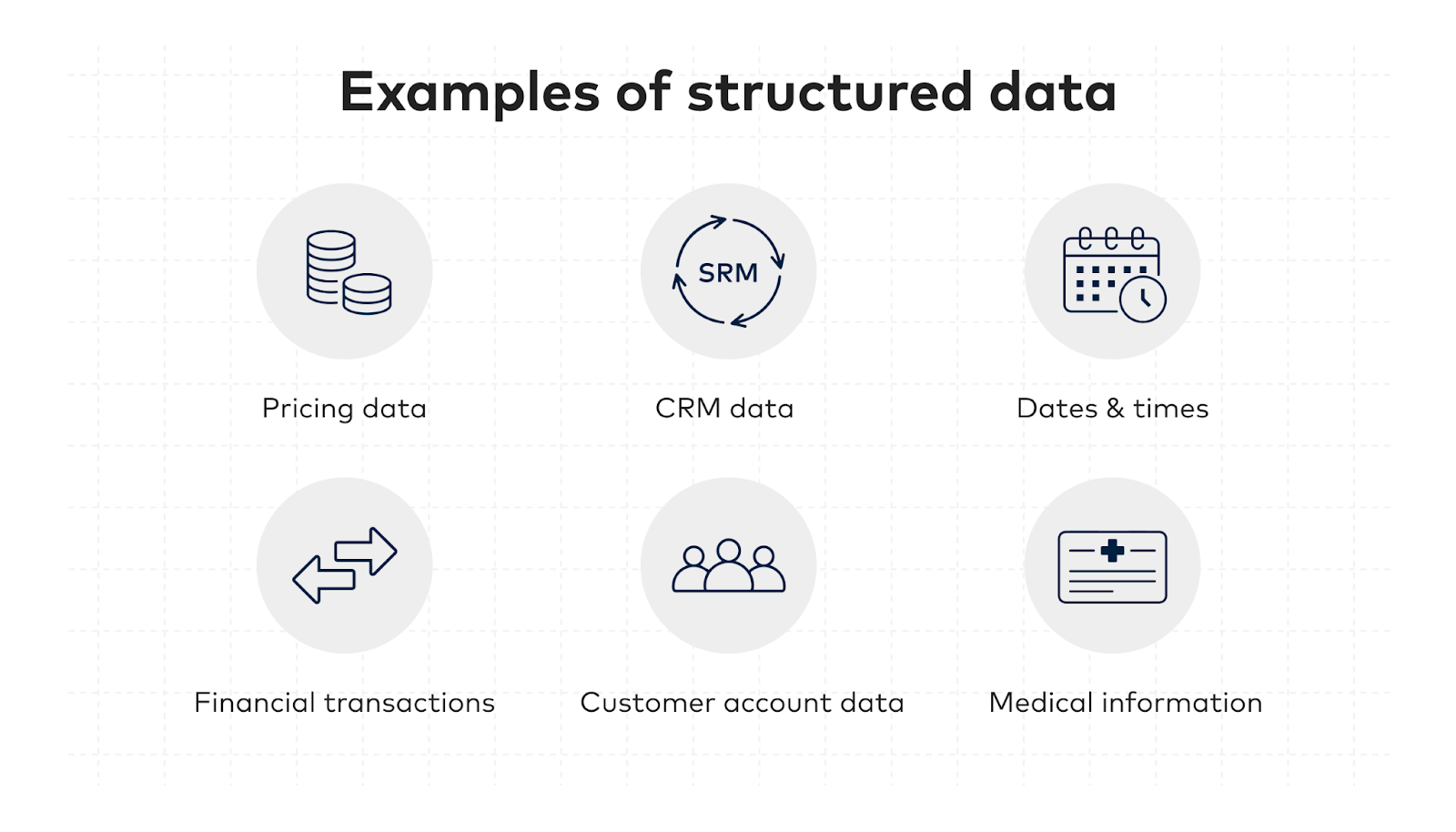

Data aggregation technology refers to the systematic process of collecting, combining, and processing structured data from multiple sources into a unified format for analysis, reporting, or storage. This fundamental technology powers everything from business intelligence dashboards to real-time analytics platforms, enabling organizations to make informed decisions based on comprehensive data insights.

The core principle behind data aggregation involves extracting data from various databases, applications, and systems, then transforming and consolidating this information into a coherent, accessible format. This process eliminates data silos and provides a holistic view of organizational information assets.

Key components of data aggregation systems

Extract, transform, load (ETL) process

ETL represents the backbone of most data aggregation systems. The extraction phase involves pulling data from source systems, which can include relational databases, cloud applications, APIs, and file systems. During transformation, the system cleanses, validates, and converts data into the target format. Finally, the load phase deposits the processed data into the destination system, typically a data warehouse or data lake.
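
The three phases can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a production pipeline: the CSV content, the `orders` table, and the cleansing rules are all hypothetical, and an in-memory SQLite database stands in for the warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source extract; in practice this would come from a database, API, or file.
RAW_CSV = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,carol,not_a_number
"""

def extract(text):
    """Extract: read rows from a CSV source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: cleanse customer names, validate amounts, drop invalid rows."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # validation failure: skip the row
        clean.append((int(row["order_id"]), row["customer"].strip().lower(), amount))
    return clean

def load(rows, conn):
    """Load: write the processed rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(extract(RAW_CSV)), conn)
```

Keeping each phase as a separate function makes it easier to test the cleansing rules without touching the source or the destination.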

Data integration platforms

Modern data integration platforms provide comprehensive solutions for aggregating structured data. These platforms offer visual interfaces for designing data flows, pre-built connectors for popular applications, and automated scheduling capabilities. They handle complex scenarios like real-time streaming data, batch processing, and hybrid cloud environments.

Application programming interfaces (APIs)

APIs serve as critical bridges between different systems in data aggregation scenarios. RESTful APIs, GraphQL endpoints, and webhook integrations enable seamless data exchange between applications. Many organizations rely on API-first approaches to ensure their systems can easily share and consume structured data.
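
As a rough illustration of consuming structured data from an API, the sketch below collects every item from a paginated endpoint. The response shape (`items`, `next_page`) and the `fetch_page` stub are assumptions made for this example; a real client would issue HTTP requests and follow whatever pagination scheme the API documents.

```python
import json

# Hypothetical paginated responses, standing in for HTTP calls to a REST endpoint.
FAKE_PAGES = {
    1: '{"items": [{"id": 1, "name": "Widget"}, {"id": 2, "name": "Gadget"}], "next_page": 2}',
    2: '{"items": [{"id": 3, "name": "Gizmo"}], "next_page": null}',
}

def fetch_page(page):
    """Stub for an HTTP GET; a real client would call the API here."""
    return json.loads(FAKE_PAGES[page])

def fetch_all(fetch, first_page=1):
    """Follow the pagination cursor until the API reports no further page."""
    items, page = [], first_page
    while page is not None:
        body = fetch(page)
        items.extend(body["items"])
        page = body["next_page"]
    return items

products = fetch_all(fetch_page)
```

Passing the fetch function as a parameter keeps the aggregation logic independent of the transport, so the same loop can be tested offline.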

Types of data aggregation technologies

Traditional data warehousing

Data warehouses represent the classic approach to structured data aggregation. These systems store large volumes of historical data in a structured format optimized for analytical queries. Popular solutions include Amazon Redshift, Google BigQuery, and Microsoft Azure Synapse Analytics. Data warehouses excel at handling complex analytical workloads and supporting business intelligence applications.

Modern data lakes

Data lakes offer a more flexible approach to data aggregation, storing both structured and unstructured data in their native formats. Technologies like Apache Hadoop, Amazon S3, and Azure Data Lake Storage provide scalable storage solutions that can accommodate diverse data types. Data lakes support schema-on-read approaches, allowing organizations to define data structures at query time rather than during ingestion.
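
The schema-on-read idea can be shown with a small sketch: raw records are stored untouched, and the reader decides which fields to project and how to cast them. The event records and the `SCHEMA` mapping are hypothetical, and real query engines apply the same principle with far richer type systems.

```python
import json

# Raw events kept in their native form (JSON lines); fields vary between records.
RAW_EVENTS = [
    '{"user": "u1", "amount": "12.50", "currency": "USD"}',
    '{"user": "u2", "amount": 7, "note": "gift"}',
    '{"user": "u3"}',
]

# The schema is declared by the reader, not enforced at ingestion.
SCHEMA = {"user": str, "amount": float}

def read_with_schema(lines, schema):
    """Apply a schema at query time: project and cast the requested fields."""
    for line in lines:
        record = json.loads(line)
        yield {
            field: cast(record[field]) if field in record else None
            for field, cast in schema.items()
        }

rows = list(read_with_schema(RAW_EVENTS, SCHEMA))
total = sum(r["amount"] for r in rows if r["amount"] is not None)
```

A different consumer could read the same raw events with a different schema, for example one that includes `currency`, without any change to the stored data.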

Real-time streaming platforms

Streaming data aggregation technologies handle continuous data flows from multiple sources simultaneously. Apache Kafka, Amazon Kinesis, and Azure Event Hubs enable organizations to process and aggregate data as it arrives, supporting use cases like fraud detection, real-time personalization, and operational monitoring.
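
One common way to aggregate a stream is to group events into fixed, non-overlapping time windows (often called tumbling windows). The sketch below is a simplified, in-memory illustration and does not use the API of any of the platforms named above; the event tuples and the 60-second window are assumptions.

```python
from collections import defaultdict

def tumbling_window_sums(events, window_seconds):
    """Group (timestamp, key, value) events into fixed windows and sum per key."""
    windows = defaultdict(lambda: defaultdict(float))
    for timestamp, key, value in events:
        # Every event belongs to exactly one window, identified by its start time.
        window_start = (timestamp // window_seconds) * window_seconds
        windows[window_start][key] += value
    return {start: dict(sums) for start, sums in sorted(windows.items())}

# Hypothetical card transactions: (seconds since start, card id, amount).
events = [
    (0, "card_a", 20.0),
    (30, "card_a", 35.0),
    (61, "card_b", 10.0),
    (75, "card_a", 500.0),
]
result = tumbling_window_sums(events, 60)
```

A monitoring rule could then compare each window's total against a threshold. A production streaming system would also need to handle late or out-of-order events, which this sketch ignores.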

Popular data aggregation tools and platforms

Enterprise solutions

Enterprise-grade data aggregation platforms offer comprehensive features for large-scale deployments. Informatica PowerCenter provides robust ETL capabilities with extensive connectivity options. Talend Data Integration offers both on-premises and cloud-based solutions with strong data quality features. IBM DataStage delivers high-performance data integration for complex enterprise environments.

Cloud-native platforms

Cloud providers offer managed data aggregation services that eliminate infrastructure management overhead. AWS Glue provides serverless ETL capabilities with automatic scaling. Google Cloud Dataflow supports both batch and stream data processing. Azure Data Factory offers hybrid data integration with extensive connector libraries.

Open source alternatives

Open source tools provide cost-effective options for data aggregation projects. Apache NiFi offers visual data flow design with real-time monitoring capabilities. Pentaho Data Integration provides comprehensive ETL functionality with community support. Apache Airflow enables workflow orchestration and scheduling for complex data pipelines.
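
Workflow orchestration largely comes down to running tasks in an order that respects their dependencies. The sketch below illustrates that idea with Python's standard `graphlib` module (Python 3.9+); it is not Airflow's API, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks it depends on (hypothetical pipeline).
PIPELINE = {
    "extract_sales": set(),
    "extract_crm": set(),
    "transform": {"extract_sales", "extract_crm"},
    "load_warehouse": {"transform"},
}

def run_order(graph):
    """Return one valid execution order in which dependencies always run first."""
    return list(TopologicalSorter(graph).static_order())

order = run_order(PIPELINE)
```

The two extract tasks have no dependency on each other, so an orchestrator is free to run them in parallel before `transform` starts.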

Implementation strategies and best practices

Data governance and quality

Successful data aggregation requires robust governance frameworks to ensure data quality, consistency, and compliance. Organizations should implement data profiling to understand source data characteristics, establish data quality rules to validate incoming information, and maintain comprehensive metadata catalogs to document data lineage and transformations.
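
Profiling and rule-based validation can start very simply. The following sketch counts missing and distinct values for a column and checks each row against a set of rules; the columns, rules, and sample rows are hypothetical and far simpler than what a dedicated data quality tool would offer.

```python
def profile(rows, column):
    """Profile one column: total rows, missing values, distinct non-missing values."""
    values = [row.get(column) for row in rows]
    present = [v for v in values if v not in (None, "")]
    return {
        "count": len(values),
        "missing": len(values) - len(present),
        "distinct": len(set(present)),
    }

# Hypothetical data quality rules, one predicate per column.
RULES = {
    "email": lambda v: isinstance(v, str) and "@" in v,
    "age": lambda v: isinstance(v, int) and 0 <= v <= 130,
}

def validate(row, rules):
    """Return the names of the columns that break their rule."""
    return [col for col, check in rules.items() if not check(row.get(col))]

rows = [
    {"email": "a@example.com", "age": 34},
    {"email": "", "age": 29},
    {"email": "c@example.com", "age": -5},
]
email_profile = profile(rows, "email")
failures = {i: validate(row, RULES) for i, row in enumerate(rows)}
```

Recording which rule failed for which row, rather than silently dropping bad rows, gives the lineage and audit information that governance processes depend on.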

Scalability and performance optimization

Designing scalable data aggregation systems involves careful consideration of processing patterns, data volumes, and performance requirements. Implementing parallel processing, optimizing data transfer protocols, and leveraging distributed computing frameworks can significantly improve system performance. Regular monitoring and performance tuning ensure systems continue to meet evolving demands.
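
A typical parallel pattern is to aggregate each data partition independently and then merge the partial results. The sketch below assumes hypothetical `(region, amount)` records and uses a thread pool for simplicity; CPU-bound workloads would more likely use processes or a distributed framework.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

def aggregate_partition(records):
    """Partial aggregation: total amount per region for one partition."""
    totals = Counter()
    for region, amount in records:
        totals[region] += amount
    return totals

def aggregate_parallel(partitions, max_workers=4):
    """Aggregate partitions concurrently, then merge the partial results."""
    merged = Counter()
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for partial in pool.map(aggregate_partition, partitions):
            merged.update(partial)  # Counter.update adds counts together
    return dict(merged)

# Hypothetical partitions of (region, amount) records.
partitions = [
    [("eu", 10), ("us", 5)],
    [("eu", 7), ("apac", 3)],
    [("us", 1)],
]
totals = aggregate_parallel(partitions)
```

This works because summation is associative: partial sums can be combined in any order, which is the property distributed frameworks rely on for this kind of aggregation.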

Security and compliance considerations

Data aggregation systems must incorporate comprehensive security measures to protect sensitive information. This includes implementing encryption for data in transit and at rest, establishing role-based access controls, and maintaining audit trails for compliance purposes. Organizations should also consider data residency requirements and privacy regulations when designing their aggregation architecture.
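
Role-based access control and audit trails can be sketched together: every access decision is derived from the caller's role and recorded whether or not it is granted. The roles, permission strings, and in-memory log below are hypothetical; a real system would persist timestamped audit entries in tamper-resistant storage.

```python
# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "analyst": {"read:aggregates"},
    "engineer": {"read:aggregates", "read:raw", "write:pipeline"},
}

audit_log = []  # in-memory stand-in for a durable audit trail

def is_allowed(role, permission):
    """Unknown roles get no permissions (deny by default)."""
    return permission in ROLE_PERMISSIONS.get(role, set())

def access(user, role, permission):
    """Check a permission and record the decision, granted or not."""
    allowed = is_allowed(role, permission)
    audit_log.append(
        {"user": user, "role": role, "permission": permission, "allowed": allowed}
    )
    return allowed

granted = access("dana", "analyst", "read:aggregates")
denied = access("dana", "analyst", "read:raw")
```

Logging denied attempts as well as granted ones is what makes the trail useful for compliance reviews.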

Common use cases and applications

Business intelligence and analytics

Data aggregation forms the foundation of business intelligence initiatives, enabling organizations to combine data from sales systems, marketing platforms, financial applications, and operational databases. This consolidated view supports strategic decision-making, performance monitoring, and trend analysis across the enterprise.
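
A consolidated view of this kind often amounts to aggregating each source to a shared grain and then joining the results. The sketch below uses an in-memory SQLite database with hypothetical `sales` and `marketing` tables and made-up figures.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE sales (region TEXT, revenue REAL);
CREATE TABLE marketing (region TEXT, spend REAL);
INSERT INTO sales VALUES ('eu', 100), ('eu', 50), ('us', 200);
INSERT INTO marketing VALUES ('eu', 30), ('us', 80);
""")

# Aggregate each source to one row per region BEFORE joining, so that
# multiple sales rows cannot duplicate the marketing spend (join fan-out).
QUERY = """
SELECT s.region, s.revenue, m.spend
FROM (SELECT region, SUM(revenue) AS revenue FROM sales GROUP BY region) AS s
JOIN (SELECT region, SUM(spend) AS spend FROM marketing GROUP BY region) AS m
  ON m.region = s.region
ORDER BY s.region
"""
report = conn.execute(QUERY).fetchall()
```

Joining the raw tables first and summing afterwards would count the `eu` marketing spend twice, once per sales row, which is a common source of inflated dashboard figures.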

Customer data platforms

Modern customer data platforms aggregate information from multiple touchpoints to create unified customer profiles. These systems combine data from websites, mobile applications, email campaigns, social media interactions, and transaction systems to provide comprehensive customer insights for personalization and marketing automation.
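
Building a unified profile requires choosing an identity key and a rule for resolving conflicting values. The sketch below assumes a normalized email address as the key and a "first non-null value wins" rule; both are illustrative choices, and the sample records are hypothetical.

```python
def unify_profiles(records, key="email"):
    """Merge per-touchpoint records into one profile per normalized identity key."""
    profiles = {}
    for record in records:
        identity = record[key].strip().lower()
        profile = profiles.setdefault(identity, {key: identity, "sources": []})
        profile["sources"].append(record["source"])
        for field, value in record.items():
            if field not in (key, "source") and value is not None:
                profile.setdefault(field, value)  # first non-null value wins
    return profiles

# Hypothetical records from three touchpoints.
records = [
    {"source": "web", "email": "Ana@Example.com", "name": "Ana"},
    {"source": "mobile", "email": "ana@example.com", "name": None, "device": "ios"},
    {"source": "email", "email": "ben@example.com", "name": "Ben"},
]
profiles = unify_profiles(records)
```

Real identity resolution is usually harder than this, since customers may use several emails or devices, but the merge step follows the same shape.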

Financial reporting and compliance

Financial institutions and regulated industries rely heavily on data aggregation for accurate reporting and compliance monitoring. These systems consolidate transaction data, risk metrics, and regulatory information from various sources to support financial statements, regulatory filings, and risk management processes.

Emerging trends and future directions

Artificial intelligence integration

Machine learning and artificial intelligence technologies are increasingly integrated into data aggregation platforms. AI-powered systems can automatically detect data quality issues, suggest optimal transformation logic, and predict system performance bottlenecks. These capabilities reduce manual effort and improve overall system reliability.

Edge computing and IoT integration

The proliferation of Internet of Things devices and edge computing creates new opportunities for distributed data aggregation. Edge-based aggregation reduces bandwidth requirements and latency while enabling real-time processing of sensor data and device telemetry.
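
The bandwidth saving comes from sending a compact summary instead of every raw reading. A minimal sketch, assuming a hypothetical batch of temperature readings collected on the device:

```python
from statistics import mean

def summarize_readings(readings):
    """Reduce a batch of sensor readings to a fixed-size summary."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }

# Hypothetical temperature readings collected at the edge.
readings = [21.0, 21.5, 22.0, 23.5]
summary = summarize_readings(readings)
```

The summary has the same size whether the batch holds four readings or four thousand. Keeping `count` alongside `mean` also lets a central system combine summaries from many devices into a correct overall average.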

Low-code and no-code platforms

User-friendly interfaces and visual development environments are making data aggregation more accessible to business users. Low-code platforms enable rapid development of data integration workflows without extensive programming knowledge, democratizing access to data aggregation capabilities.

Challenges and considerations

Data complexity and volume

Modern organizations face challenges related to increasing data volumes, variety, and velocity. Legacy systems may struggle to handle these demands, requiring architectural updates or complete platform migrations. Organizations must carefully evaluate their current and future requirements when selecting aggregation technologies.

Integration complexity

Connecting diverse systems with different data formats, protocols, and authentication mechanisms can be complex. Organizations should prioritize solutions with extensive connector libraries and flexible integration capabilities to minimize development effort and maintenance overhead.

Cost management

Data aggregation projects can involve significant infrastructure and licensing costs. Cloud-based solutions offer pay-as-you-go pricing models that can help manage costs, but organizations must carefully monitor usage to avoid unexpected expenses. Implementing efficient data processing strategies and optimizing resource utilization are crucial for cost control.

Data aggregation technology continues to evolve to meet the growing demands of digital transformation initiatives. Organizations that invest in robust, scalable aggregation platforms position themselves to leverage their data assets effectively, driving innovation and competitive advantage in their respective markets. Success requires careful planning, appropriate technology selection, and ongoing optimization to ensure systems continue to meet business requirements as they evolve.